Introduction to NetWorkSpaces

Installation

The following are instructions for installing your own copy of the NetWorkSpaces (NWS) server and client. Since your home directory is the same on all zoo nodes, it will suffice to do this just once on any one of them. Python and Twisted are already installed. If you plan to use a Linux machine other than a zoo node, Python will almost certainly be installed, but you will probably have to install Twisted.

Server installation

- Create a directory for your Python modules, say ~/myInstalls/python.

- Download the tgz or zip archive from the class web site and unpack it in a temporary directory (best not to use your module directory).

cd nws/python/open_server

python setup.py install --prefix= --home=~/myInstalls/python

- Test in a different directory, say /tmp.

- Let Python know where to find the nws module:

export PYTHONPATH=~/myInstalls/python/lib/python

then, let 'er rip:

twistd -noy ~/myInstalls/python/nws.tac

2007/02/03 14:30 EST [-] Log opened.

2007/02/03 14:30 EST [-] twistd 2.1.0 (/usr/bin/python 2.4.2) starting up

2007/02/03 14:30 EST [-] reactor class: twisted.internet.selectreactor.SelectReactor

2007/02/03 14:30 EST [-] Loading /home/accts/njc2/myInstalls/python/nws.tac...

2007/02/03 14:30 EST [-] clientCode served from directory clientCode

2007/02/03 14:30 EST [-] clientCode directory doesn't exist

2007/02/03 14:30 EST [-] Loaded.

2007/02/03 14:30 EST [-] nwss.server.NwsFactory starting on 8765

2007/02/03 14:30 EST [-] Starting factory

2007/02/03 14:30 EST [-] twisted.web.server.Site starting on 8766

2007/02/03 14:30 EST [-] Starting factory

2007/02/03 14:30 EST [-] using temp directory /tmp

...

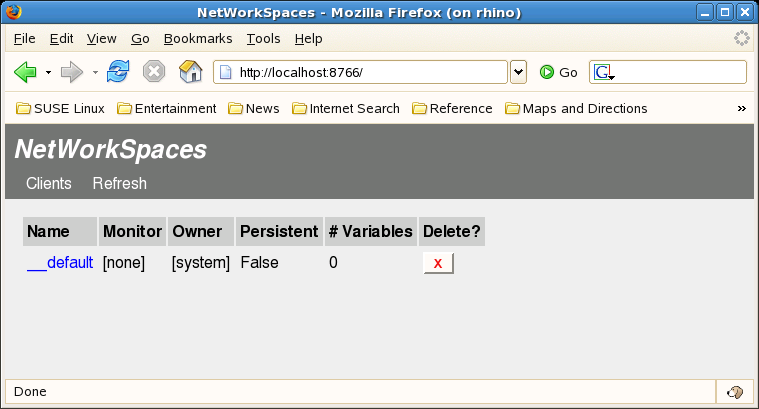

Start firefox (or other browser) on the same machine and enter the url localhost:8766. You should see something similar to:

Leave the server running until you are done testing. You can kill it with ˆC.

Client Installation

- In a new window, and back at your temporary install directory, execute:

cd nws/python/open_client

python setup.py install --prefix= --home=~/myInstalls/python

Then start the babelfish translator:

python ~/myInstalls/python/lib/python/nws/babelfish.py &

- As before, test in a different directory and let Python know where to find the nws module:

export PYTHONPATH=~/myInstalls/python/lib/python

- Now try it:

$ python

Python 2.4.2 (#1, Oct 13 2006, 17:11:24)

[GCC 4.1.0 (SUSE Linux)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import nws.client as nwsC

>>> ws = nwsC.NetWorkSpace('testing ...')

>>> ws.store('v', range(3))

>>> ws.fetch('v')

[0, 1, 2]

>>> ws.store('xyz', 123.456)

>>> ws.store('zyx', {'cat': 'dog', 'mouse': 'click'})

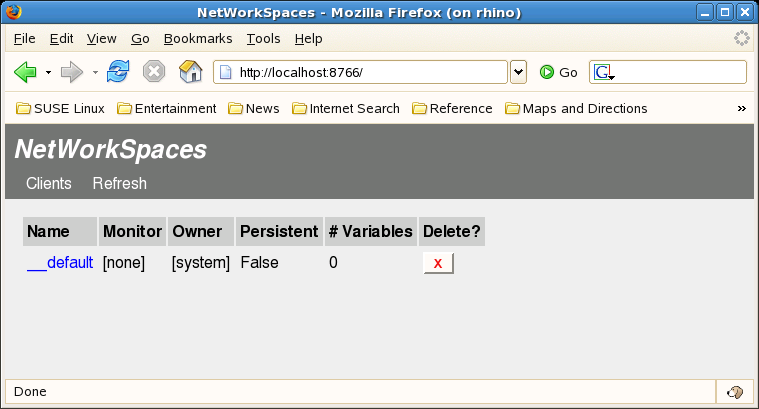

- Refresh the browser, you should now see your workspace. Clicking on the workspace should list the variables in it. Clicking on a variable should list the values bound to it.

Note: You may run into problems with port numbers. By default, the server uses 8765 for the service proper and 8766 for the web interface. If two of you are working on the same machine, you cannot both use the default ports. These are easily adjusted by editing the nws.tac file. If you change ports, you will have to make adjustments on the client side: i) when instantiating a workspace, provide a serverPort=XXXX argument to the constructor (serverHost=foo.bar.baz will override the default host, localhost); ii) babelfish.py accepts the command line options -p XXXX and -h foo.bar.baz.

Use

NWS is based on the concept of a set of bindings, known variously as an environment, a name space, a workspace, ... . A binding maps a name to a value. A given language will have rules about allowable names, allowable values for a given name, the context in which the binding is valid (scope), and so on. The language will also provide operations for establishing a binding and for retrieving the value of a bound name. Often these operations will be implied by the lexical structure of code, as will the intended binding set:

x = y

The RHS use of y implies a look up of the value bound to y and the x on the LHS will be the target of the assignment, i.e., x will be bound to whatever value is currently bound to y.

NWS is designed to be a coordination facility that is language neutral. NWS clients exist for a variety of languages, including MATLAB, Perl, Python, R, and Ruby. This neutrality offers advantages, chief among them: i) NWS coordination patterns and idioms can be applied to any of these, ii) by using the quasi-lingua franca of ASCII strings to encode data, NWS can be used to coordinate heterogeneous ensembles of these.

It also implies certain costs: in particular, it cannot (always) be as seamlessly integrated as native bindings.

Toss up: NWS names can be any ASCII string.

Thus, the above rendered in NWS-ese:

ws.store('x', ws.fetch('y'))

We have to make explicit the look-up/retrieval (fetch), the association of the name x with the retrieved value (store), and the intended binding set (ws object).

In many languages it is possible to neaten this up — the introductory lectures demonstrated a “cleaner” API for Python:

sv.x = sv.y

where sv is a shared variable container.

We will soon see, however, that the exigencies of coordination induce alterations to binding semantics. Creating the illusion of similarity when, in fact, there are important differences is a bad thing that leads to frequent violations of the principle of least astonishment.

Coordinated Binding Behavior

Consider our old friend:

x = y

What if y has not yet been defined? In the setting of a conventional sequential program, we could get junk data. A more helpful language might signal an error or raise an exception — after all, nobody else is going to come along and define y for us.

But in an ensemble setting, somebody very well might do just that. In other words, in the context of coordination, an unbound name has a perfectly valid (and useful) interpretation: “Please hold.” It doesn't have to have this interpretation, but it seems to make sense, so let's run with it.

Now consider:

x = 123

x = 456

In the setting of a conventional sequential program, it's reasonable to allow the second assignment to simply overwrite 123 with 456 — we're the only process in town, so who else is going to care about the old value?

But in an ensemble setting, lots of other processes may be interested in the sequence of values bound to x. If so, how do we know a particular value of x has been put to good use? Enter generative communication: some coordination events generate data that exist independent of any process, others consume such data. Let's interpret the binding of a value to a name as the addition of that value to a list of values mapped to that name rather than the (possible) overwriting of a single associated value. Let's further stipulate that we do so by maintaining a FIFO queue of values. But how do we ever shed values? To complete the picture: retrieval of a value bound to a name removes one value from the queue. Again, it doesn't have to have this interpretation, but arguably this is a reasonable one.

In sum: an assignment records a value of interest, a retrieval consumes one value, an empty list of values triggers 'Please Hold' for a retrieval.
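These three rules can be tried out without a server. The following is a minimal sketch, assuming a purely local, in-process stand-in: the class ToyWorkspace is hypothetical and is not the nws client; it only mimics the store and fetch behavior described above, with threads playing the role of separate processes.

```python
import threading
from collections import deque


class ToyWorkspace(object):
    """Local, in-process stand-in for a workspace (hypothetical; not the nws client)."""

    def __init__(self):
        self._values = {}                  # name -> deque of values, oldest first
        self._cond = threading.Condition()

    def store(self, name, value):
        # An assignment appends to the queue instead of overwriting.
        with self._cond:
            self._values.setdefault(name, deque()).append(value)
            self._cond.notify_all()

    def fetch(self, name):
        # A retrieval consumes the oldest value; an empty queue means "Please hold".
        with self._cond:
            while not self._values.get(name):
                self._cond.wait()
            return self._values[name].popleft()


ws = ToyWorkspace()
ws.store('x', 123)
ws.store('x', 456)
first, second = ws.fetch('x'), ws.fetch('x')   # the older value comes out first

# A fetch on an unbound name waits until some other party stores a value.
received = []
waiter = threading.Thread(target=lambda: received.append(ws.fetch('y')))
waiter.start()
ws.store('y', 'hello')
waiter.join()
print(first, second, received)
```

Both values stored under x survive until they are fetched, and the waiting thread completes only once y has been bound.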

Let's see how well these play together. In one or more Python sessions, run the following:

import nws.client

def f(x): return x*x*x

ws = nws.client.NetWorkSpace('table test')

while 1: ws.store('r', f(ws.fetch('x')))

In a separate Python session, run this:

import nws.client

ws = nws.client.NetWorkSpace('table test')

for x in range(10): ws.store('x', x)

for x in range(10): print 'f(%d) = %d'%(x, ws.fetch('r'))

Notes:

- Worker pre- (and post-) start.

- Number of workers variable.

- Value ordering — for a two-body ensemble.
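The points in these notes can be explored locally, without NWS, by letting threads stand in for the Python sessions. The sketch below is an assumption-laden model: the variables x and r are represented by two standard-library queue.Queue objects, run_ensemble is a hypothetical helper, and the None sentinel exists only so that the toy workers can exit.

```python
import queue
import threading


def f(x):
    return x * x * x


def run_ensemble(n_workers, n_tasks=10):
    """Model the variables 'x' and 'r' as two FIFO queues shared by threads."""
    xs, rs = queue.Queue(), queue.Queue()

    def worker():
        while True:
            x = xs.get()          # like ws.fetch('x'): blocks until a value exists
            if x is None:         # sentinel so the toy workers can exit (assumption)
                return
            rs.put(f(x))          # like ws.store('r', f(x))

    workers = [threading.Thread(target=worker) for _ in range(n_workers)]
    for w in workers:
        w.start()
    for x in range(n_tasks):
        xs.put(x)
    results = [rs.get() for _ in range(n_tasks)]
    for _ in workers:
        xs.put(None)
    for w in workers:
        w.join()
    return results


one = run_ensemble(1)
many = run_ensemble(3)
print(one == [f(x) for x in range(10)])   # a single worker preserves task order
print(sorted(many) == sorted(one))        # same values; arrival order not guaranteed
```

With one worker and one master (the two-body case) results arrive in task order; with several workers only the set of results is fixed. Storing the tasks before starting the workers would work equally well, since stored values simply wait in the queue.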

Variations

That worked out nicely, but that's not very surprising: NWS was designed to support this sort of thing. What about other coordination patterns?

Consider maintaining a global maximum. Suppose many processes are cooperating in a search to find a value, xmax, that will maximize a function, F, and that, knowing that F's maximum is at least F+, we can rule out some candidate x's. Further, let's assume F is expensive to evaluate, but the winnowing check is cheap.

We would like to do something like:

for x in MyCandidateList:
    currentMax = ws.fetch('max')
    if noGo(currentMax, x): continue
    y = f(x)
    if y > currentMax: ws.store('max', y)

But that would be wrong ... .

- fetch consumes a value that may not be replaced.

We would like a way to consult a variable without destroying the value: find.

for x in MyCandidateList:
    currentMax = ws.find('max')
    if noGo(currentMax, x): continue
    y = f(x)
    if y > currentMax: ws.store('max', y)

But that would be wrong ... .

- Are we maintaining a single 'max'?

- Is currentMax really current?

How about:

for x in MyCandidateList:
    currentMax = ws.find('max')
    if noGo(currentMax, x): continue
    y = f(x)
    currentMax = ws.fetch('max')
    if y > currentMax: currentMax = y
    ws.store('max', currentMax)

Better: typos aside, this should work. But suppose it is very unlikely that even a “pre-qualified” x will result in a new maximum. Can we reduce the coordination traffic?
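One possible approach, offered as a sketch rather than as the intended answer: consult 'max' with a second find after computing y, and pay for the fetch/store pair only when y beats the value seen. This is safe because 'max' never decreases. The code runs against a hypothetical in-process stand-in (ToyWorkspace, search, the traffic list and the toy objective f are all assumptions, not part of the nws client), and it leaves out the noGo winnowing step to stay short.

```python
import threading
from collections import deque


class ToyWorkspace(object):
    """In-process stand-in with store/fetch/find (hypothetical; not the nws client)."""

    def __init__(self):
        self._values = {}
        self._cond = threading.Condition()

    def store(self, name, value):
        with self._cond:
            self._values.setdefault(name, deque()).append(value)
            self._cond.notify_all()

    def _take(self, name, remove):
        with self._cond:
            while not self._values.get(name):
                self._cond.wait()
            q = self._values[name]
            return q.popleft() if remove else q[0]

    def fetch(self, name):
        return self._take(name, remove=True)     # consumes the value

    def find(self, name):
        return self._take(name, remove=False)    # leaves the value in place


def search(ws, candidates, f, traffic):
    for x in candidates:
        y = f(x)
        # Cheap, non-destructive check first: 'max' never decreases, so a y that
        # does not beat the value seen now can never become the maximum.
        if y <= ws.find('max'):
            continue
        current = ws.fetch('max')     # others wait here until we store back
        traffic.append(x)
        ws.store('max', max(y, current))


ws = ToyWorkspace()
ws.store('max', float('-inf'))
traffic = []                          # candidates that needed a fetch/store pair
f = lambda x: -(x - 37) ** 2          # toy objective, peak value 0 at x = 37
chunks = [range(i, 100, 4) for i in range(4)]
threads = [threading.Thread(target=search, args=(ws, c, f, traffic)) for c in chunks]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(ws.find('max'), len(traffic))
```

The number of fetch/store pairs depends on scheduling, but it cannot exceed the number of candidates and is usually far smaller. The comparison is repeated after the fetch because another process may have raised 'max' between the find and the fetch.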

There are other uses for find, the most common being “write-once” variables: various data that are established at the beginning of a computation, or are independent of any one computation, and that are needed by two or more ensemble members.

find alters the way the value queue is referenced, but what about variations in the queue itself? NWS supports four “types” (aka “modes”):

- FIFO: the default

- LIFO: because you cannot have one without the other

- Non-deterministic: Back to our Linda roots

- Single: Not uncommon just to want the “last one”. Works well with find. Good for status values: simplifies monitors that read them.

To specify one of these, use ws.declare().
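To make the four modes concrete, here is a small local model of a single variable's value store. It is a sketch under stated assumptions: ToyVariable, drain and the mode labels 'fifo', 'lifo', 'multi' and 'single' are hypothetical names chosen for this illustration, not the arguments that ws.declare() expects, and the blocking “Please hold” behavior is left out.

```python
import random


class ToyVariable(object):
    """One variable's value store under a given mode (hypothetical model)."""

    def __init__(self, mode='fifo'):
        if mode not in ('fifo', 'lifo', 'multi', 'single'):
            raise ValueError('unknown mode: %r' % (mode,))
        self.mode = mode
        self.values = []

    def store(self, value):
        if self.mode == 'single':
            self.values = []            # only the most recent value is kept
        self.values.append(value)

    def _index(self):
        if self.mode == 'lifo':
            return -1                   # newest first
        if self.mode == 'multi':
            return random.randrange(len(self.values))   # no ordering promised
        return 0                        # fifo (and single, which holds one value)

    def find(self):
        return self.values[self._index()]       # look without consuming

    def fetch(self):
        return self.values.pop(self._index())   # look and consume


def drain(mode):
    """Store 1, 2, 3 under the given mode, then fetch until empty."""
    v = ToyVariable(mode)
    for value in (1, 2, 3):
        v.store(value)
    return [v.fetch() for _ in range(len(v.values))]


fifo, lifo, single, multi = drain('fifo'), drain('lifo'), drain('single'), drain('multi')
print(fifo, lifo, single, sorted(multi))
```

In this model the FIFO and LIFO stores hand back all three values in opposite orders, the single store retains only the last value, and the non-deterministic store returns the three values in no particular order.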

Managing Workspaces

NWS supports multiple workspaces (analogous to multiple tuplespaces). We've seen that the constructor takes the name of a workspace as its first argument. It can also take a hostname and a port number to specify the server that will host the workspace. You knew something like this had to be coming: without it, how could we form ensembles with parts running on distinct computers?

So if multiple processes each execute:

ws = nws.client.NetWorkSpace('snake pit', serverHost='python.zoo.cs.yale.edu')

they would all have access to the same workspace.

But who would own it? And why would that matter?

Answers: By default, the process that first mentions the workspace to the server owns it. Ownership matters because we need to clean up, and traditional gc doesn't really apply.

Workspaces, like tuplespaces, can be persistent. In practice this can quickly lead to a mess, so they are by default transitory: when the process that owns them exits, they exit. This can make staging an ensemble a bit of a pain, even if the general idea is right. Hence the distinction between “use” and “open”.

Workspace naming presents a bit of a challenge: how to avoid collisions? Consider, for example, multiple instances of an ensemble application using the same NWS server.

mktempWs works something like mkdtemp. It returns a reference to a workspace whose name is guaranteed to be unique on the server. Note: once we've got our own workspace, we can use variable names within it without worrying about those colliding with some other ensemble program.
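The mkdtemp side of the analogy can be shown with the standard library alone. The sketch below does not touch NWS: temporary directories stand in for workspaces and files for variables, purely as an illustration, and the 'ensemble-' prefix and the read helper are arbitrary choices.

```python
import os
import shutil
import tempfile


def read(path):
    with open(path) as fh:
        return fh.read()


# Two "instances" of the same application each ask for a private container.
a = tempfile.mkdtemp(prefix='ensemble-')
b = tempfile.mkdtemp(prefix='ensemble-')
try:
    distinct = (a != b)               # every call returns a name not used before
    # The same inner name ('max') can now be used by both without a collision.
    for d, value in ((a, '1'), (b, '2')):
        with open(os.path.join(d, 'max'), 'w') as fh:
            fh.write(value)
    contents = [read(os.path.join(d, 'max')) for d in (a, b)]
finally:
    shutil.rmtree(a)                  # the owner cleans up its own container
    shutil.rmtree(b)
print(distinct, contents)
```

Each instance writes to a name called max, yet neither sees the other's value, which is the property a uniquely named workspace provides for variable names.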